Back in April this year, Dr David Johnson from the ISA team gave a presentation on “Data Infrastructures to Foster Data Reuse” at the workshop “Integrating Large Data into Plant Science: From Big Data to Discovery”, hosted by GARnet (the UK network for Arabidopsis researchers) and Egenis (the Exeter Centre for the Study of the Life Sciences). The workshop was held at Dartington Hall in Devon, South West England, and was well attended by plant and biological scientists from around the world, as well as by industry representatives from organisations such as Syngenta.

David presented ISA and biosharing.org as candidate data infrastructure resources for enabling data reuse in the plant sciences, and showed an example of how high-throughput plant phenotyping data might be encoded in ISA-Tab.

We have seen the ISA-Tab format taken up across a broad range of the life sciences, and we regard its adoption in the plant sciences, as a means of making data FAIR (Findable, Accessible, Interoperable and Reusable), as essential for the field. In particular, centres such as the UK’s National Plant Phenomics Centre in Aberystwyth, Wales, could benefit greatly from adopting ISA: they face emerging data management challenges, since automated data collection is a significant driver of modern plant-based research and agritech.

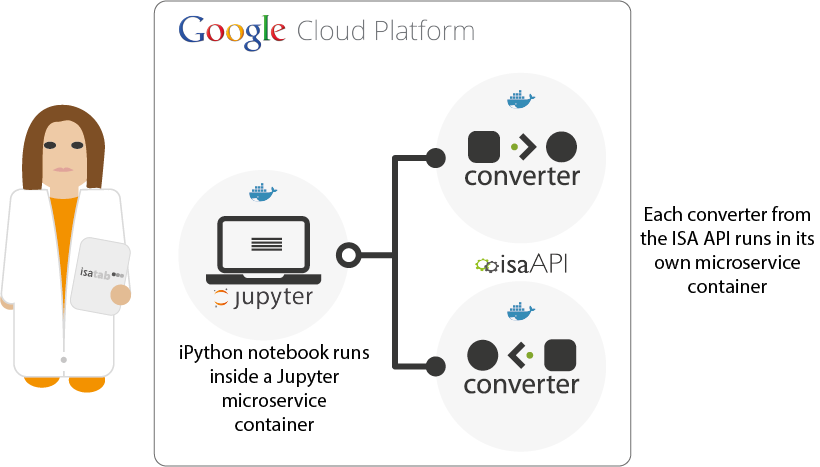

There are also existing data resources and analysis platforms, such as Araport (the Arabidopsis Information Portal), TAIR (The Arabidopsis Information Resource) and BioDare (Biological Data Repository), that could benefit from standardising their experimental data. Ongoing efforts to create open data resources in the plant sciences, such as the Collaborative Open Plant Omics (COPO) project, will be using the new ISA JSON format for their native data objects.

You can check out David’s presentation on SlideShare.